Azure ML is Microsoft's cloud ML platform. It launched as a preview (Azure Machine Learning Studio) in July 2014 and went generally available in 2015, and the rebuilt Azure Machine Learning service reached general availability in 2018. By now it's grown from a simple web designer into a full MLOps platform supporting Python SDKs, CLI tools, and REST APIs. If you're already stuck in Microsoft hell with Office 365, Teams, and Azure subscriptions, this is probably your least painful option for deploying models.

The main selling point is that it works with the rest of Microsoft's stuff without requiring a PhD in DevOps. You get a drag-and-drop designer, the Python SDK, or the Azure CLI - pick your poison. The drag-and-drop thing is cute until you need to do something it wasn't designed for, and then you're back to writing code anyway.

The Real Cost Story

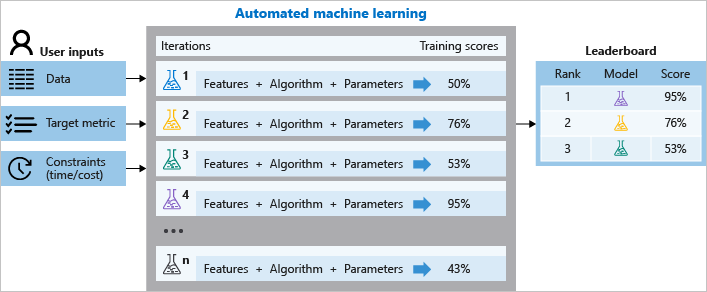

It's "free" like AWS is free - you only pay for compute, storage, and data transfer. Expect $200-500/month minimum for anything real, and $2000+ if you're training anything substantial. GPU instances run $2-10/hour, so those AutoML experiments add up fast.
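To see how "add up fast" happens, here's a back-of-envelope estimator. It's a sketch, assuming flat per-node-hour billing, 22 working days a month, and rates plucked from the $2-10/hour range above; real prices depend on VM size and region, so run the numbers through Azure's pricing calculator before quoting anyone.

```python
def monthly_gpu_cost(rate_per_hour: float, hours_per_day: float,
                     days_per_month: int = 22, nodes: int = 1) -> float:
    """Rough monthly GPU bill: rate x hours/day x days x node count.

    Ignores storage, egress, and idle time, so treat the result as a floor.
    """
    if min(rate_per_hour, hours_per_day, days_per_month, nodes) < 0:
        raise ValueError("inputs must be non-negative")
    return rate_per_hour * hours_per_day * days_per_month * nodes


if __name__ == "__main__":
    # One cheap GPU node, 4 hours per working day: 3.0 * 4 * 22 * 1 = 264.0
    print(monthly_gpu_cost(3.0, 4))
    # Four pricey nodes grinding through AutoML sweeps 6 hours a day: 5280.0
    print(monthly_gpu_cost(10.0, 6, nodes=4))
```

The second call is how a "small" AutoML habit blows straight past the $2000 mark.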

Here's what Microsoft won't tell you: storage costs creep up because the platform loves creating intermediate datasets. Data egress charges appear out of nowhere when you're moving models around. That "serverless" compute? Still costs money when it's scaling up and down.
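If "serverless" here means an autoscaling compute cluster, the money leaks from two places: nodes you keep warm with a minimum count, and nodes that sit idle after each job until the scale-down timer fires. The estimator below is a rough sketch, assuming per-node-hour billing, a 730-hour month, and that every job's nodes idle for the full timeout (back-to-back jobs reusing warm nodes would cost less). The parameter names echo `min_instances` and `idle_time_before_scale_down` on SDK v2's `AmlCompute`, but the function itself is hypothetical.

```python
HOURS_PER_MONTH = 730.0  # assumption: common cloud-pricing approximation


def cluster_overhead_cost(jobs_per_month: int, nodes_per_job: int,
                          idle_seconds: int, rate_per_hour: float,
                          min_nodes: int = 0) -> float:
    """Estimate monthly spend from keeping cluster nodes around.

    - min_nodes are billed around the clock, whether or not a job is running.
    - Each job's extra nodes (beyond min_nodes) linger for idle_seconds
      after the job finishes before the cluster scales them down.
    """
    if min(jobs_per_month, nodes_per_job, idle_seconds, min_nodes) < 0 or rate_per_hour < 0:
        raise ValueError("inputs must be non-negative")
    always_on = min_nodes * HOURS_PER_MONTH * rate_per_hour
    extra_nodes = max(nodes_per_job - min_nodes, 0)
    idle_tail = jobs_per_month * extra_nodes * (idle_seconds / 3600) * rate_per_hour
    return always_on + idle_tail


if __name__ == "__main__":
    # 200 jobs/month on 4 nodes at $3/hour with a 30-minute idle timeout:
    # 200 * 4 * 0.5 h * $3 = $1200 of pure idle time.
    print(cluster_overhead_cost(200, 4, 1800, 3.0))
    # Same workload with a 2-minute timeout but one node pinned warm:
    # the always-on node dwarfs the idle tail.
    print(cluster_overhead_cost(200, 4, 120, 3.0, min_nodes=1))
```

The takeaway: a short idle timeout with `min_nodes=0` is usually the cheap setting, at the price of waiting for cold starts.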

What Actually Works

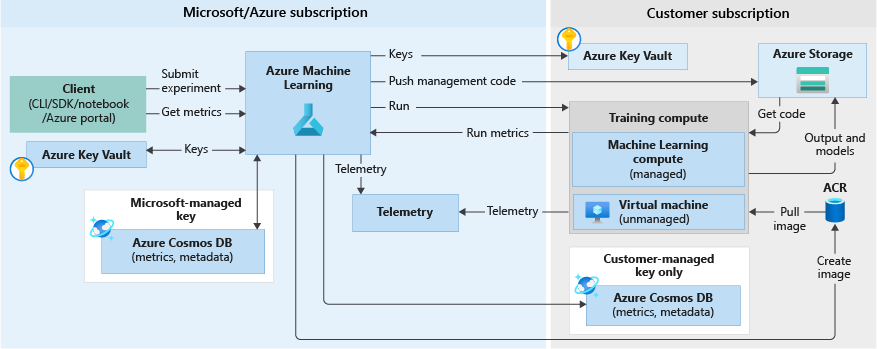

The Microsoft ecosystem integration: If you're already using Azure Synapse for data processing, Key Vault for secrets, and Azure AD (now renamed Microsoft Entra ID) for identity, everything just works. Entra ID handles authentication, Azure DevOps hosts your code repos, and Key Vault stores secrets, so there's no more fighting with IAM policies for 3 hours.

The model catalog: They've got hundreds of pre-trained models from OpenAI, Hugging Face, Meta, etc. It's actually decent - you can fine-tune or deploy directly without the usual model conversion nightmares.

MLflow integration: Built-in MLflow support means your existing MLflow tracking code and artifacts work, with the workspace acting as the tracking server. Git integration means no more "model_v2_final_REALLY_FINAL_FOR_REAL.pkl" bullshit.

What Will Make You Want to Quit

Pipeline failures: Error messages are garbage. "Pipeline failed at step 3" - cool, which step? Why? Go fuck yourself, figure it out. I've spent entire afternoons clicking through logs trying to find the actual error buried 200 lines deep.
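When the portal only says "Pipeline failed at step 3", a dumb text scan of that step's downloaded logs often finds the real error faster than clicking around. Here's a minimal sketch, assuming the logs are plain text and the failure shows up either as a Python exception line (`SomethingError: ...`) or an `ERROR`-level log line. The patterns are guesses, not anything Azure ML guarantees, so tune them for your stack.

```python
import re
from typing import Iterable, List, Optional, Tuple

# Guesses at what a real failure looks like in a step's stdout/stderr.
ERROR_PATTERNS = [
    re.compile(r"^\s*[\w.]*(?:Error|Exception):"),  # e.g. "ValueError: bad shape"
    re.compile(r"\bERROR\b"),                       # e.g. "2024-05-01 ERROR disk full"
]


def first_error(lines: Iterable[str], context: int = 3) -> Optional[Tuple[int, List[str]]]:
    """Return (1-based line number, nearby lines) for the first line that
    matches any ERROR_PATTERNS, or None if the log looks clean."""
    buf = [line.rstrip("\n") for line in lines]
    for i, line in enumerate(buf):
        if any(p.search(line) for p in ERROR_PATTERNS):
            start = max(0, i - context)
            return i + 1, buf[start:i + context + 1]
    return None
```

Feed it a log file with `with open(path) as f: print(first_error(f))`, where `path` is whatever file you pulled from the failed step. Because the exception pattern hits the last line of a Python traceback, the context lines before it show the frames that actually blew up.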

AutoML overselling: AutoML works great for toy problems and gives you garbage for anything complex. Budget 2-3 hours for it to run, then another day to figure out whether the results are useful or whether you should have just used scikit-learn locally. And don't get me started on the AutoML documentation - it's better than AWS's, but that's like saying a root canal is better than an amputation.
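Before you burn a day judging AutoML output, compare it to the dumbest possible model. For classification, that's always predicting the most common label; if AutoML's accuracy barely clears that bar, the local scikit-learn route (or more feature work) is probably the better use of your time. A minimal sketch, assuming a classification task scored on accuracy. The 5-point `min_gain` threshold is arbitrary, and scikit-learn's `DummyClassifier(strategy="most_frequent")` gives you the same baseline if you'd rather not hand-roll it.

```python
from collections import Counter
from typing import Hashable, Sequence


def majority_baseline_accuracy(y_true: Sequence[Hashable]) -> float:
    """Accuracy you'd get by always predicting the most frequent label."""
    if len(y_true) == 0:
        raise ValueError("y_true must be non-empty")
    _, top_count = Counter(y_true).most_common(1)[0]
    return top_count / len(y_true)


def automl_worth_it(y_true: Sequence[Hashable], automl_accuracy: float,
                    min_gain: float = 0.05) -> bool:
    """True if AutoML beats the majority-class baseline by at least min_gain."""
    return automl_accuracy - majority_baseline_accuracy(y_true) >= min_gain


if __name__ == "__main__":
    labels = ["no"] * 90 + ["yes"] * 10  # imbalanced: baseline accuracy is 0.9
    print(automl_worth_it(labels, automl_accuracy=0.92))  # False: only ~2 points better
```

On imbalanced data this check catches the classic AutoML "92% accuracy" headline that turns out to be barely better than guessing "no".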

Compute resource quotas: You'll hit quota limits when you least expect it. The "Request increase" button submits a ticket that takes 2-3 business days. Plan accordingly or keep your manager updated on why training is stuck.
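Since the increase ticket takes days, it's worth checking headroom before you schedule anything important. Azure ML quotas are counted in vCPUs per VM family, per region, so the check is simple arithmetic. This is a sketch with hypothetical names: plug in the limit and current usage from the quota page in Azure ML studio or the portal, and the node's vCPU count from the VM size docs (the 6-vCPU node in the example is illustrative).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FamilyQuota:
    """vCPU quota for one VM family in one region (values copied by hand)."""
    limit_cores: int  # approved vCPU limit
    used_cores: int   # vCPUs already allocated by running clusters/instances


def extra_cores_needed(quota: FamilyQuota, nodes: int, cores_per_node: int) -> int:
    """vCPUs to ask for in the increase ticket; 0 means the job already fits."""
    requested = nodes * cores_per_node
    return max(0, quota.used_cores + requested - quota.limit_cores)


if __name__ == "__main__":
    gpu_quota = FamilyQuota(limit_cores=24, used_cores=12)
    # Four 6-vCPU GPU nodes need 24 cores; only 12 are free.
    print(extra_cores_needed(gpu_quota, nodes=4, cores_per_node=6))  # 12
```

Run it a week before the demo, not the morning of.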

Documentation gaps that'll drive you insane: The official docs have holes big enough to drive a truck through. Stack Overflow has more answers from 2019 than current solutions, which is fucking helpful when you're debugging SDK v2 (`azure-ai-ml`) problems with SDK v1 (`azureml-core`) advice. Check GitHub issues for the real problems people are hitting. The community forums are hit-or-miss, but they sometimes have gems from Microsoft engineers who actually know what they're talking about.
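A quick way to tell whether that Stack Overflow answer is v1 advice before you paste it: v1 code imports from `azureml.core` (and friends like `azureml.pipeline` and `azureml.train`), while v2 imports from `azure.ai.ml`. This sketch parses a snippet with Python's standard `ast` module, without running it or installing either SDK, and sorts its imports by SDK. The prefix lists are a starting point, not exhaustive.

```python
import ast
from typing import Dict, List, Tuple

# Module prefixes per SDK generation; extend as you run into more.
V1_PREFIXES: Tuple[str, ...] = ("azureml.core", "azureml.pipeline", "azureml.train")
V2_PREFIXES: Tuple[str, ...] = ("azure.ai.ml",)


def _has_prefix(module: str, prefixes: Tuple[str, ...]) -> bool:
    return any(module == p or module.startswith(p + ".") for p in prefixes)


def classify_sdk_imports(source: str) -> Dict[str, List[str]]:
    """Parse (never execute) `source` and bucket its imports into SDK v1 / v2."""
    found: Dict[str, List[str]] = {"v1": [], "v2": []}
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            modules = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom) and node.level == 0 and node.module:
            modules = [node.module]
        else:
            continue
        for module in modules:
            if _has_prefix(module, V1_PREFIXES):
                found["v1"].append(module)
            elif _has_prefix(module, V2_PREFIXES):
                found["v2"].append(module)
    return found


if __name__ == "__main__":
    snippet = "from azureml.core import Workspace\nfrom azure.ai.ml import MLClient\n"
    print(classify_sdk_imports(snippet))
```

If the `v1` bucket is non-empty and you're on SDK v2, treat the answer as a hint about concepts, not code to copy.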

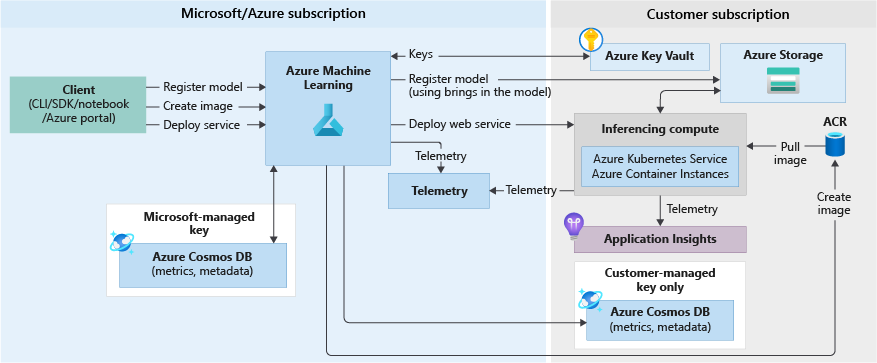

Production deployment disaster: Pushed what should've been a simple model update and the inference endpoint just died. Took like 6 hours to get it back up because Azure ML decided to rebuild everything from scratch. Error logs were useless - just "deployment failed" over and over.

Quota hell: Hit GPU limits right before a big demo. Had to tell a room full of executives that our "scalable cloud solution" needed a support ticket and 2-3 days to work. Good times.

Environment sync hell: Your local Python environment works fine. Azure ML's "equivalent" environment fails with cryptic package conflicts that take 3 hours to debug. The curated environments work until you need one custom package; then you're writing conda specs or Dockerfiles.
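When the cloud environment breaks and the local one doesn't, diffing the pins is a faster first step than reading solver output. A minimal sketch, assuming you have `pip freeze` output from your machine and the pip list from the environment's conda file as text. It only understands `name==version` pins, normalizes names the PEP 503 way so `scikit_learn` and `scikit-learn` match, and ignores everything else. The versions in the example are made up.

```python
import re
from typing import Dict, Iterable, List


def _normalize(name: str) -> str:
    # PEP 503 name normalization: runs of -, _, . become "-", then lowercase.
    return re.sub(r"[-_.]+", "-", name).lower()


def parse_pins(lines: Iterable[str]) -> Dict[str, str]:
    """Map normalized package name -> version for `name==version` lines.

    Handles YAML list items ("- numpy==1.26.4"), comments, extras, and
    environment markers; skips anything not pinned with ==.
    """
    pins: Dict[str, str] = {}
    for raw in lines:
        line = raw.split("#", 1)[0].split(";", 1)[0].strip().lstrip("- ").strip()
        if "==" not in line:
            continue
        name, version = line.split("==", 1)
        pins[_normalize(name.split("[", 1)[0].strip())] = version.strip()
    return pins


def diff_pins(local: Dict[str, str], remote: Dict[str, str]) -> Dict[str, List]:
    """Report packages pinned on only one side and versions that differ."""
    shared = set(local) & set(remote)
    return {
        "only_local": sorted(set(local) - set(remote)),
        "only_remote": sorted(set(remote) - set(local)),
        "version_mismatch": sorted(
            (name, local[name], remote[name]) for name in shared if local[name] != remote[name]
        ),
    }


if __name__ == "__main__":
    local = parse_pins(["numpy==1.26.4", "pandas==2.2.2"])
    remote = parse_pins(["  - numpy==1.26.4", "  - pandas==2.1.0"])
    print(diff_pins(local, remote))
```

A mismatch list won't explain every conflict (transitive dependencies still bite), but it narrows 3 hours of debugging down to a handful of suspects.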