The University of Florida just published another photonic computing breakthrough. They built a silicon chip that does AI math with light instead of electricity, claiming 98% accuracy with "near-zero energy consumption."

I've been tracking optical computing promises for 12 years. Every year brings another academic breakthrough that's going to "revolutionize AI." Every year, reality wins.

What They Actually Built (And Why It Doesn't Matter)

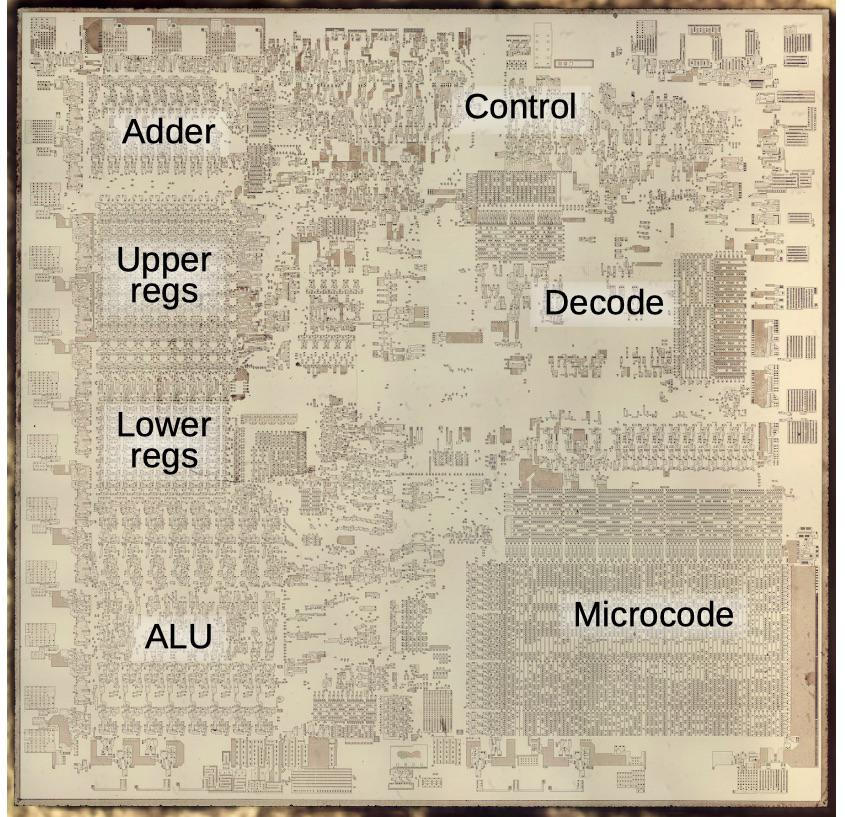

The UF researchers etched Fresnel lenses directly onto silicon - microscopic structures that manipulate laser light to perform convolution operations. Input data gets encoded as light patterns, passes through optical processing, then converts back to electrical signals.
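How can lenses "do" a convolution? Lens-based optical processors are usually explained with the convolution theorem. A lens produces (approximately) the Fourier transform of the light field, a mask multiplies that spectrum pointwise, and a second lens transforms back. The sketch below assumes the UF design follows this principle and models it in 1D with a naive discrete Fourier transform. The function names are mine. The second lens is modeled as an inverse transform, although a physical lens applies a forward transform with flipped coordinates, which this sketch ignores.

```python
import cmath


def dft(x):
    """Naive O(n^2) discrete Fourier transform, standing in for the first lens."""
    n = len(x)
    return [sum(x[k] * cmath.exp(-2j * cmath.pi * j * k / n) for k in range(n))
            for j in range(n)]


def idft(spectrum):
    """Inverse DFT, standing in (loosely) for the second lens."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * cmath.pi * j * k / n) for k in range(n)) / n
            for j in range(n)]


def conv_direct(x, h):
    """Circular convolution computed the electronic way: multiply-accumulate."""
    n = len(x)
    return [sum(x[k] * h[(j - k) % n] for k in range(n)) for j in range(n)]


def conv_fourier(x, h):
    """Same result via transform -> pointwise mask -> inverse transform."""
    masked = [a * b for a, b in zip(dft(x), dft(h))]
    return [v.real for v in idft(masked)]


signal = [1, 2, 3, 4]
kernel = [1, 0, 0, 1]
print(conv_direct(signal, kernel))                          # [3, 5, 7, 5]
print([round(v, 6) for v in conv_fourier(signal, kernel)])  # [3.0, 5.0, 7.0, 5.0]
```

That equality is the whole trick. The lenses perform the expensive transform during propagation, but encoding the input and reading out the result are still electronic work.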

Volker Sorger's team demonstrated 98% accuracy on MNIST digit recognition. That's the "hello world" of machine learning - the equivalent of getting your Arduino to blink an LED and claiming you've revolutionized embedded systems.

The Manufacturing Reality That Nobody Talks About

Precision requirements are insane. Those microscopic lenses need sub-wavelength accuracy - we're talking about maintaining feature sizes smaller than the wavelength of light they're manipulating. One dust particle during fabrication ruins the entire optical path.

I worked on silicon photonics at a startup in 2018. Our yield rates were around 12% for simple waveguide structures, maybe less on bad days. These UF chips have hundreds of precisely aligned optical elements. Good luck hitting commercial yield rates when we couldn't even get basic waveguides working consistently.
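Here's why "hundreds of precisely aligned optical elements" is scary. The simplest yield model assumes every element must work and defects are independent. That assumption is generous, because real defects cluster. Under it, chip yield is the per-element yield raised to the number of elements. The per-element yields and element counts below are hypothetical and illustrate the compounding only. They are not measured numbers from my startup or from UF.

```python
def chip_yield(per_element_yield: float, n_elements: int) -> float:
    """Probability that all n elements work, assuming independent failures."""
    if not 0.0 <= per_element_yield <= 1.0:
        raise ValueError("per-element yield must be a probability")
    return per_element_yield ** n_elements


# Hypothetical per-element yields and element counts, for illustration only.
for p in (0.999, 0.99, 0.95):
    row = ", ".join(f"n={n}: {chip_yield(p, n):6.1%}" for n in (10, 100, 300))
    print(f"per-element {p}: {row}")
```

Even at 99% per element, a 100-element chip comes out around 37%. At 95% per element, it drops below 1%.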

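Every fab is different. TSMC's process variations that don't matter for digital chips become deal-breakers for optical components. A 5nm variation in etch depth? That changes the effective index of every structure it touches. In resonant devices, a few nanometers of dimensional error can push the operating wavelength off by a nanometer or more, which is enough to detune the optical path. Process control requirements make advanced-node digital chips look easy.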
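Here's a first-order way to see how small fabrication and temperature errors become optical problems. In a resonant silicon structure such as a ring resonator, a change in effective index Δn_eff shifts the resonance wavelength by roughly λ·Δn_eff/n_g, where n_g is the group index. The UF chip uses lenses, not rings, so treat this as a picture of silicon photonics' sensitivity in general. Three inputs are assumptions: the 1550 nm wavelength, the group index of 4.2, and the Δn_eff attributed to etch variation. The thermo-optic coefficient of about 1.8e-4 per kelvin is the commonly quoted bulk-silicon figure near 1550 nm. Using it for a waveguide is an approximation.

```python
def resonance_shift_nm(wavelength_nm: float, delta_n_eff: float, group_index: float) -> float:
    """First-order resonance shift: d(lambda) ~= lambda * d(n_eff) / n_g."""
    return wavelength_nm * delta_n_eff / group_index


WAVELENGTH_NM = 1550.0  # assumed telecom-band operation
GROUP_INDEX = 4.2       # assumed, typical order for silicon wire waveguides

# Hypothetical effective-index error caused by etch-depth variation.
process_shift = resonance_shift_nm(WAVELENGTH_NM, 0.002, GROUP_INDEX)

# Thermal drift: approximate silicon thermo-optic coefficient for a 1 K change.
DN_DT_PER_K = 1.8e-4
thermal_shift_per_k = resonance_shift_nm(WAVELENGTH_NM, DN_DT_PER_K, GROUP_INDEX)

print(f"process-induced shift: {process_shift:.2f} nm")
print(f"thermal drift: {thermal_shift_per_k:.3f} nm per kelvin")
```

Sub-nanometer shifts sound harmless until you compare them with a resonance linewidth, which in high-Q rings can be a fraction of a nanometer. At roughly 0.07 nm per kelvin, a few degrees of drift can detune a device too. That's why active thermal control shows up in the power budget.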

The Power Problem Everyone Ignores

"Near-zero energy" is academic bullshit. Here's what you actually need:

- Lasers: Continuous-wave lasers burning maybe 10-50 watts, possibly more depending on how much optical power you actually need

- Thermal management: Optical properties drift like hell with temperature. You need active cooling to maintain wavelength stability, and that's another 20-30 watts right there

- Optical-to-electrical conversion: Photodetectors and amplifiers for every output

- Precision control: Feedback systems to maintain optical alignment

I measured total system power on an optical neural network prototype in 2020. The "zero power" optical computation used around 150 watts of supporting infrastructure, maybe more, to replace operations that consumed about 8 watts on an NVIDIA V100.
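Here's a tally that makes the budget concrete. The laser and cooling ranges are the ones from the list above. The photodetector/amplifier and alignment-control figures are hypothetical placeholders, because the list gives no numbers for them. The 8 W baseline is the V100 figure from the 2020 measurement.

```python
from dataclasses import dataclass


@dataclass
class PowerItem:
    name: str
    low_w: float
    high_w: float


def total_power(items):
    """Return (low, high) total watts for a list of supporting components."""
    return sum(i.low_w for i in items), sum(i.high_w for i in items)


support = [
    PowerItem("CW lasers", 10, 50),                   # range from the list above
    PowerItem("Active thermal control", 20, 30),      # range from the list above
    PowerItem("Photodetectors + amplifiers", 5, 20),  # HYPOTHETICAL placeholder
    PowerItem("Alignment feedback control", 5, 20),   # HYPOTHETICAL placeholder
]
GPU_BASELINE_W = 8  # V100 figure from the 2020 measurement

low, high = total_power(support)
print(f"supporting power: {low}-{high} W "
      f"({low / GPU_BASELINE_W:.1f}x to {high / GPU_BASELINE_W:.1f}x the GPU baseline)")
```

This prints 40-120 W, or 5x to 15x the GPU baseline. The measured prototype drew roughly 150 W against 8 W, about 19x, which is above even the high end of this tally.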

The Integration Hell Nobody Mentions

This chip only does convolutions. Real neural networks need:

- Memory hierarchies for weight storage and activation caching

- Non-linear activation functions (ReLU, sigmoid, etc.)

- Data marshaling between optical and electrical domains

- Control logic for batch processing and inference scheduling

You end up building a hybrid system more complex than pure digital solutions. Memory bandwidth limitations become worse because now you're constantly converting between optical and electrical signals.
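Here's a rough count of the domain crossings. It assumes, per the list above, that only convolutions run optically. Activations, pooling, and fully connected layers all stay electronic. Every switch between domains costs a conversion: modulators going in, photodetectors and their readout electronics coming out. The layer list is a hypothetical small CNN, not the UF architecture.

```python
# Assumption from the article: only convolutions run optically; the rest is electronic.
OPTICAL_OPS = {"conv"}


def domain(op: str) -> str:
    return "optical" if op in OPTICAL_OPS else "electrical"


def count_conversions(layers, start="electrical"):
    """Count optical<->electrical crossings as activations flow through the layers.

    Data starts in electronics (memory) and must end there, so a trailing
    optical layer costs one final optical-to-electrical conversion.
    """
    conversions = 0
    current = start
    for op in layers:
        if domain(op) != current:
            conversions += 1
            current = domain(op)
    if current != "electrical":
        conversions += 1
    return conversions


# Hypothetical small CNN; not the UF architecture.
cnn = ["conv", "relu", "conv", "relu", "pool", "conv", "relu", "fc"]
print(count_conversions(cnn))  # 6
```

Three optical convolutions cost six conversions. Each one needs conversion hardware plus the electronics around it, and each one moves a full set of activations across the boundary.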

Intel Already Tried This (And Failed)

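Intel has invested heavily in silicon photonics since the early 2000s. They had better resources, better foundries, and actual customers lined up, and they shipped optical transceivers in volume. Even so, in 2023 they sold their pluggable transceiver business to Jabil instead of continuing to run it themselves, which says something about how the economics worked out.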

Ayar Labs raised $130M for optical interconnects and they're still struggling with manufacturing yields. Lightmatter raised $400M for photonic AI accelerators - their latest chips are 50% optical, 50% digital because pure optical doesn't work.

The Questions That Actually Matter

Can you manufacture this at scale? Building one chip in a university cleanroom is different from TSMC volume production. Optical components have much stricter process tolerances.

What's the total cost per operation? Including amortized R&D, manufacturing complexity, and system integration. Google's TPUs work because they're cost-effective, not just power-efficient.
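Here's a back-of-the-envelope cost-per-operation model that folds in the pieces named above: amortized R&D, manufacturing cost inflated by yield, and system integration. Every parameter name and number is hypothetical. The point is the structure, where low yield and low volume land directly in the numerator.

```python
def cost_per_operation(rd_cost, units_shipped, die_cost, yield_fraction,
                       integration_cost, ops_per_second, lifetime_seconds):
    """Dollars per operation over a device's service life (hypothetical model)."""
    if not 0 < yield_fraction <= 1:
        raise ValueError("yield must be in (0, 1]")
    amortized_rd = rd_cost / units_shipped
    good_die_cost = die_cost / yield_fraction  # you also pay for the dies that fail
    per_unit = amortized_rd + good_die_cost + integration_cost
    return per_unit / (ops_per_second * lifetime_seconds)


# The same hypothetical device at two yields.
THREE_YEARS_S = 3 * 365 * 24 * 3600
for y in (0.90, 0.12):
    c = cost_per_operation(rd_cost=50e6, units_shipped=10_000, die_cost=200,
                           yield_fraction=y, integration_cost=1_000,
                           ops_per_second=1e14, lifetime_seconds=THREE_YEARS_S)
    print(f"yield {y:.0%}: {c:.3e} $/op")
```

With these made-up inputs, amortized R&D dominates at 10,000 units. That's the point: a research chip has to reach real volume before its per-operation cost means anything.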

How does it handle real workloads? ResNet training, transformer inference, BERT fine-tuning. Not toy problems like MNIST classification.

What happens when components age? Optical elements degrade over time. Laser output power drifts. Thermal cycling causes mechanical stress on micro-optical structures.

Why This Research Still Matters (Sort Of)

Academic value: Understanding optical computing limitations requires building and testing actual systems. This paper contributes to that knowledge base.

Long-term potential: If someone solves the manufacturing and integration problems, optical computing could genuinely help with AI power consumption. Data center energy usage is becoming a real problem.

Commercial reality: This technology is at least 10 years away from commercial viability, and that assumes major breakthroughs in manufacturing processes.

The Cynical Bottom Line

UF's Florida Semiconductor Institute wants to become a major research hub. Publishing "breakthrough" papers gets media attention and funding. Actual commercial deployment? That's someone else's problem.

I'll be impressed when they demonstrate:

- Manufacturing yield data on 100+ chips

- Performance on actual AI workloads beyond MNIST

- Total system power consumption including all supporting electronics

- Cost analysis versus existing digital solutions

- Reliability data over thousands of operating hours
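Why 100+ chips and not a handful? A yield measured on a few devices carries huge uncertainty. The sketch below uses the Wilson score interval, a standard confidence interval for a proportion. The chip counts are hypothetical.

```python
import math


def wilson_interval(passed: int, tested: int, z: float = 1.96):
    """Approximate 95% Wilson score interval for a measured yield."""
    if tested <= 0:
        raise ValueError("need at least one tested chip")
    p = passed / tested
    denom = 1 + z ** 2 / tested
    centre = (p + z ** 2 / (2 * tested)) / denom
    half = z * math.sqrt(p * (1 - p) / tested + z ** 2 / (4 * tested ** 2)) / denom
    return centre - half, centre + half


# Same 60% observed yield, very different certainty (hypothetical counts).
for passed, tested in ((3, 5), (60, 100)):
    lo, hi = wilson_interval(passed, tested)
    print(f"{passed}/{tested} working: plausible yield {lo:.0%} to {hi:.0%}")
```

If 3 of 5 chips work, the true yield could plausibly be anywhere from about 23% to 88%. If 60 of 100 work, that narrows to roughly 50% to 69%.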

Until then, this is just another academic paper that sounds revolutionary but hits the same engineering walls that have killed optical computing promises for decades.

My prediction: This stays in academic journals for 5+ years. No commercial partnerships. No volume production. Just more papers with incrementally better results on toy problems.

The laws of physics are negotiable in academic papers. Manufacturing reality is not.