Didn't plan to migrate off Pinecone.

Was working fine. Then our bill tripled overnight because we hit some usage threshold nobody mentioned. That's when I started looking at alternatives.

Bill Went Insane Without Warning

Woke up to a Slack notification

- bill jumped from like $800 to over $3000. Turns out we crossed into their next pricing tier. Nobody at Pinecone bothered to warn us this was coming. Customer support took two days to even respond when I asked what happened.

CEO wasn't thrilled. Started asking why our search feature costs more than our entire database infrastructure. Fair question.

What pushed me to actually do something:

- No warning about pricing tiers

- Support was useless when we needed answers

- Realized we had zero negotiating power for renewals

- Bill was growing faster than our user base

Started researching alternatives while still pissed about the bill.

Found plenty of options but switching is harder than you'd think.

Why Teams Actually Switch (It's Not Just Money)

Sure, saving 60% on your vector database bill is nice.

But there's other reasons that push teams over the edge:

Security guy kept asking where the hell our data lives

- Try explaining to your CISO that vectors are "in the cloud somewhere".

He was not amused. At least with Qdrant's VPC deployment I can point to our own infrastructure. Self-hosted vector databases give you actual control over your data residency, unlike cloud-only solutions that keep everything in their black box.

They won't build the shit we need

- Pinecone has strong opinions about vector search.

Need custom distance metrics?

Good luck. Want to store big metadata payloads?

Nope. pgvector lets you do whatever weird stuff you need, and Weaviate's multi-modal capabilities actually support complex use cases instead of forcing you into their box.

CTO paranoia about vendor lock-in

- He kept asking "what if they jack up prices during renewal?" Fair point.

When you're stuck, they know it. Sales gets pushy. Open source alternatives eliminate this problem entirely, and even managed open source options give you export capabilities that proprietary vendors block.

Reality Check on Migration Difficulty

Most teams underestimate how much work this is. Database migration best practices apply here, but vector databases have their own special hellscape of issues:

Pretty straightforward if:

- Basic cosine similarity search only

- Simple metadata (no complex filters)

- Can handle brief downtime during index rebuilds

- Team comfortable with Docker deployments and container orchestration

Prepare for pain if:

- Heavy use of namespaces for multi-tenancy

- Complex metadata filtering logic scattered across your codebase

- Need zero-downtime migration patterns

- Team panics at the sight of Kubernetes YAML

How Long This Actually Takes

Blog posts claiming \"migrate in a weekend\" are bullshit.

Here's what actually happened, backed up by real migration case studies:

Month 1-ish

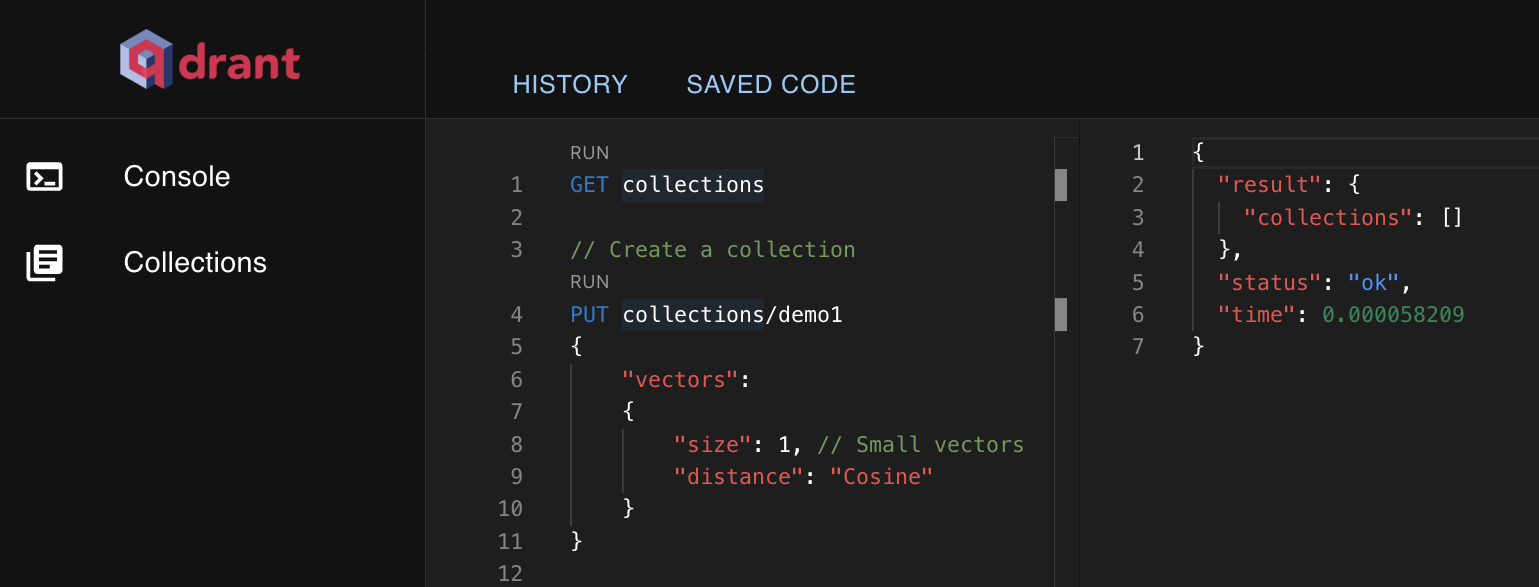

- Spin up Qdrant locally, test with some data.

Took way longer than expected because filtering syntax is totally different and I had to hunt down filter code in like 15 files. Vector database migration tools help but semantic differences between platforms always bite you.

Month 2-ish

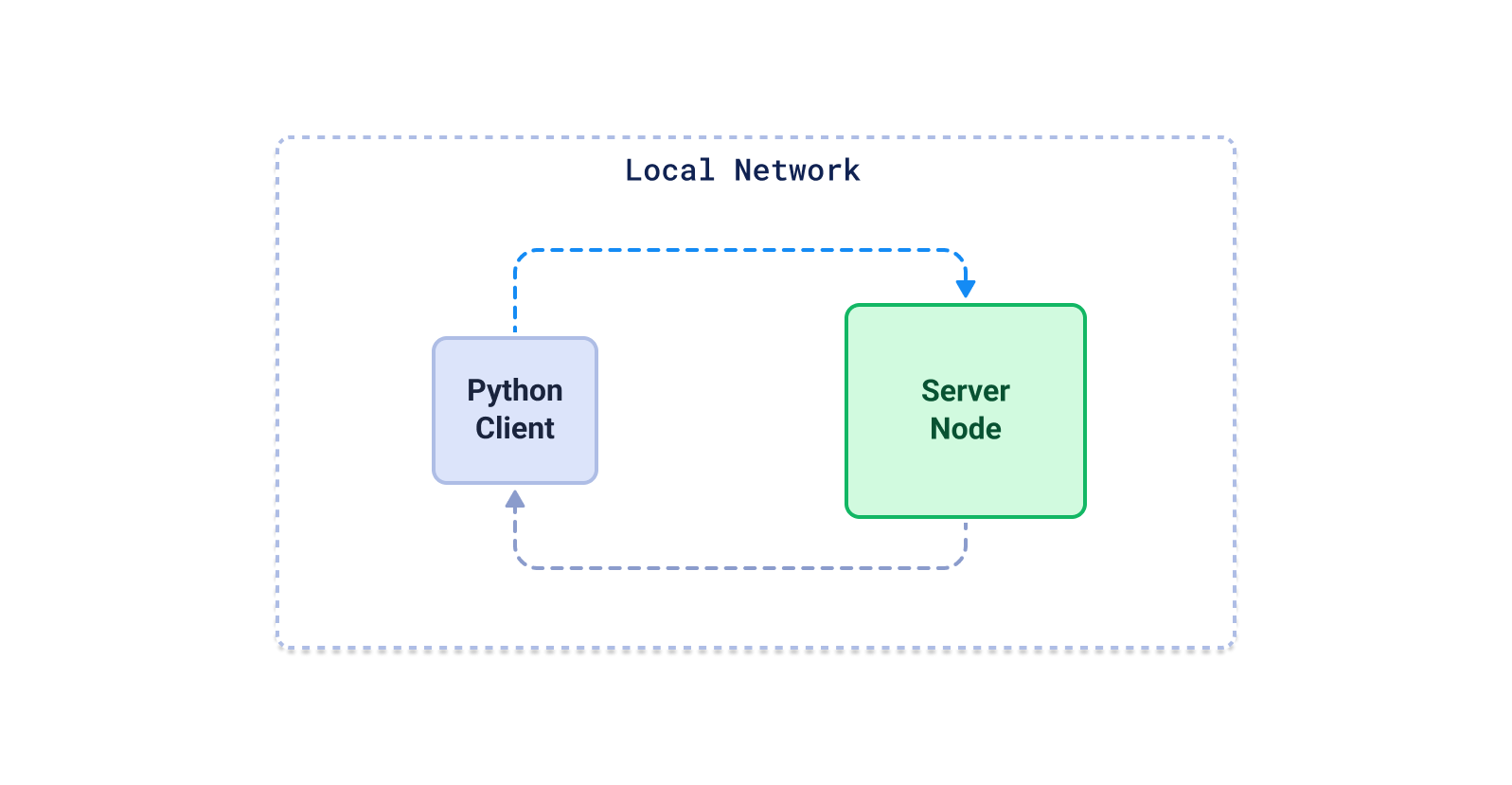

- Set up production infrastructure. Kubernetes made me want to drink.

Gave up and used Qdrant Cloud after a week of YAML hell. Self-managed vector databases require serious infrastructure expertise.

Month 3-ish

- Fix all the edge cases testing didn't catch.

There's always more bullshit that breaks only in prod. Production vector database performance differs significantly from local development, and query optimization becomes critical.

Month 4-ish

- Actually migrate production data.

Ran both systems in parallel until I was confident nothing was fucked. Blue-green deployment patterns work well for vector database migrations.

Took me around 4 months total. Could probably do it faster but you don't want to rush this shit. Database migration projects consistently take longer than expected.